
Dota - Tianai Dong

Hi! I am a third-year PhD student affiliated with the Multimodal Language Department at the Max Planck Institute for Psycholinguistics and the Predictive Brain Lab at the Donders Institute (Centre for Cognitive Neuroimaging). I am funded by an IMPRS fellowship.

I study how humans acquire, mentally represent, and generate predictions about language through our rich multimodal experiences. My approach combines computational methods with insights from neuroscience, linguistics, and psychology, with the dual goals of understanding the human mind and advancing artificial intelligence.

If you want to discuss any academia-related topics, please feel free to reach out to me :)


2025 Oct

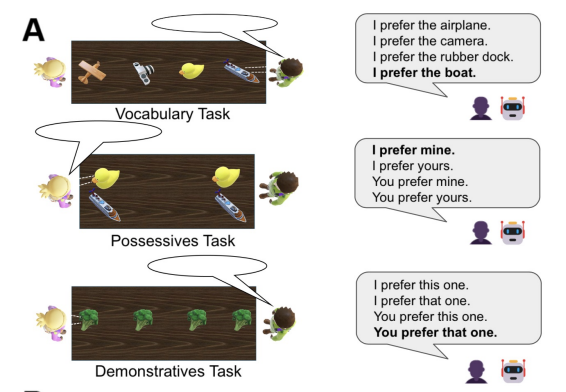

💡 "You Prefer This One, I Prefer Yours: Using Reference Words is Harder Than Vocabulary Words for Humans and Multimodal Language Models" is presented as a spotlight talk at PragLM@Colm.

2025 May

I gave a talk at CIMEC computational linguistics on "Grounding Language (and Language Models) by Seeing, Hearing, and Interacting".


Dota Tianai Dong (co-first), Yifan Luo (co-first), Po-Ya Angela Wang, Asli Ozyurek, Paula Rubio-Fernandez

Spotlight💡 PragLM@Colm, 2025; Preprint, 2025

We evaluated seven MLMs against humans on three word classes: vocabulary words, possessive pronouns, and demonstrative pronouns. Humans and models share a consistent difficulty hierarchy, but a clear performance gap remains: MLMs approach human-level performance on vocabulary tasks yet show substantial deficits with possessive and demonstrative pronouns.


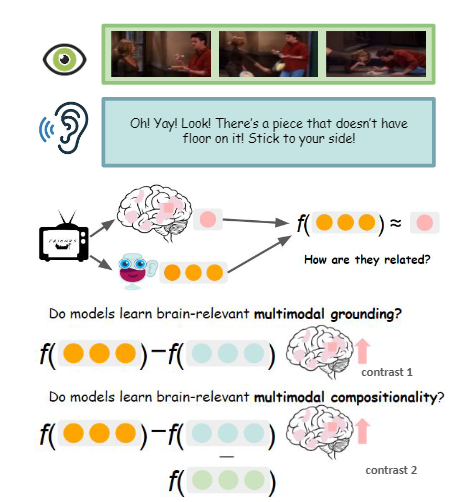

Dota Tianai Dong, Mariya Toneva

ICLR-MRL, 2023; CCN, 2023; Preprint, 2024

We propose to probe a pre-trained multimodal video transformer model, guided by neuroscientific evidence on multimodal information processing in the human brain.


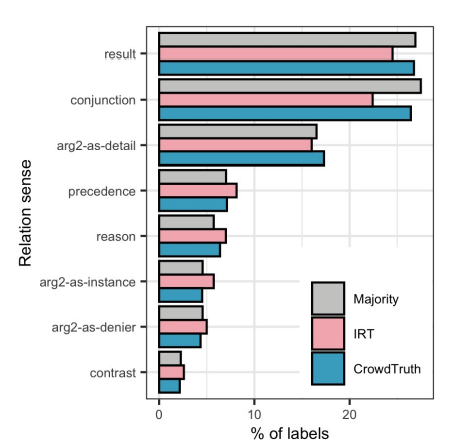

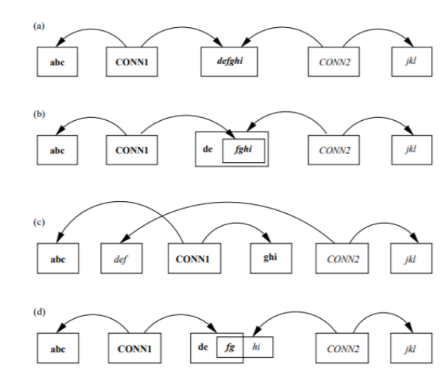

Merel Scholman, Dota Tianai Dong, Frances Yung, Vera Demberg

LREC, 2022

We present DiscoGeM, a crowdsourced corpus of 6,505 implicit discourse relations from three genres: political speech, literature, and encyclopedic text.


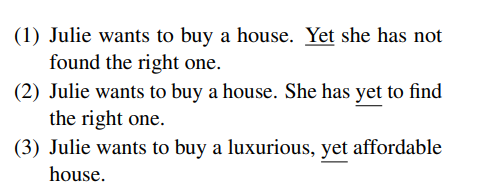

Merel Scholman, Dota Tianai Dong, Frances Yung, Vera Demberg

CODI, 2021

We assess how well four parsing methods identify explicit connectives (PDTB e2e, Lin et al., 2014; the winner of CONLL2015, Wang and Lan, 2015; DisSent, Nie et al., 2019; and Discopy, Knaebel and Stede, 2020), along with a simple heuristic.


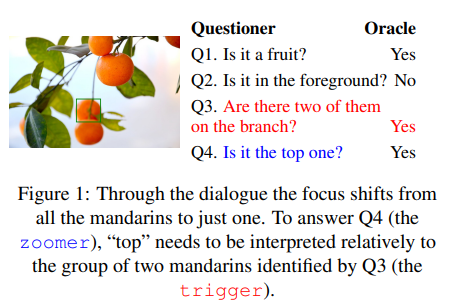

Dota Tianai Dong, Alberto Testoni, Luciana Benotti, Raffaella Bernardi

Splurobonlp, 2021

We define and evaluate a methodology for extracting history-dependent spatial questions from visual dialogues.


Dota Tianai Dong, Bonnie Webber, Jennifer Spenader

Bachelor Thesis

